The world is becoming increasingly connected, intelligent, and autonomous. At the core of this transformation is Artificial Intelligence (AI), which is moving from the cloud to the edge, closer to where data is generated and actions are performed. This shift, often referred to as Embedded AI, is redefining how we design embedded systems. It is creating demand for specialized hardware that can run complex Machine Learning (ML) tasks efficiently and with low latency.

The proliferation of IoT devices, autonomous vehicles, smart cities, and industrial automation demands real-time processing and decision-making capabilities that cannot always rely on constant cloud connectivity. Latency, bandwidth limitations, and privacy concerns are driving the imperative to integrate AI directly into embedded devices. This presents a unique set of challenges and opportunities for hardware architects and embedded system designers.

The Rise of Edge AI: Why Now?

Several factors are driving the rapid adoption of Edge AI today:

- Improved Hardware Efficiency: Advanced semiconductors and AI accelerators deliver high-performance inference within limited power budgets.

- Optimized AI Models: Techniques like quantization, pruning, and knowledge distillation enable smaller, efficient models for constrained devices.

- Connectivity Limits: While 5G reduces latency, sending raw data to the cloud is costly; edge AI ensures local, immediate decision-making.

- Privacy & Security: On-device processing safeguards sensitive information, reducing risks tied to cloud transmission.
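To put the connectivity point above in rough numbers, the sketch below compares the data rate of an uncompressed camera stream with the rate of sending only inference results. The resolution, frame rate, and per-detection size are illustrative assumptions, not figures from any particular device.

```python
def raw_video_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Data rate of an uncompressed video stream in bytes per second."""
    return width * height * bytes_per_pixel * fps


def results_bytes_per_s(detections_per_frame, bytes_per_detection, fps):
    """Data rate when only inference results leave the device."""
    return detections_per_frame * bytes_per_detection * fps


# Assumed example: 1080p RGB camera at 30 frames per second.
raw = raw_video_bytes_per_s(1920, 1080, 3, 30)
# Assumed example: 10 detections per frame, 16 bytes each (box + class + score).
results = results_bytes_per_s(10, 16, 30)

print(f"raw stream:   {raw * 8 / 1e9:.2f} Gbit/s")
print(f"results only: {results * 8 / 1e3:.1f} kbit/s")
print(f"reduction:    {raw // results}x")
```

Real deployments would normally compress video before upload, so the gap is smaller in practice, but the comparison shows why local inference reduces the load on the network.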

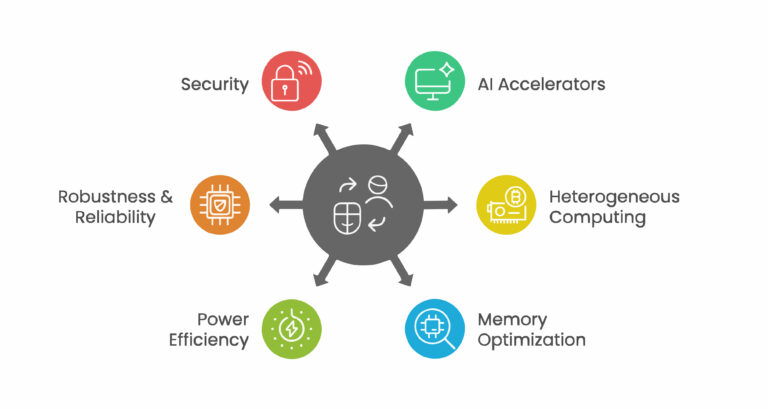

Key Hardware Trends for Embedded AI

Designing hardware for machine learning on the edge requires a holistic approach, considering not just processing power but also memory, power consumption, thermal management, and robust packaging.

1. Specialized AI Accelerators

General-purpose CPUs are not optimized for the parallel computations inherent in ML algorithms. This has led to the rise of dedicated AI accelerators:

- Neural Processing Units (NPUs): These are specifically designed to speed up neural network operations, offering high performance per watt. They often feature large numbers of MAC (multiply-accumulate) units.

- GPUs (Graphics Processing Units): While traditionally used for graphics, GPUs’ parallel architecture makes them highly effective for ML training and, increasingly, for inference in more powerful edge devices.

- FPGAs (Field-Programmable Gate Arrays): FPGAs offer flexibility and reconfigurability, allowing developers to customize hardware logic to precisely match the requirements of specific ML models. This can lead to highly optimized performance for certain applications.

- ASICs (Application-Specific Integrated Circuits): For high-volume applications where performance and power efficiency are paramount, custom ASICs offer the ultimate optimization, albeit with higher upfront development costs.
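As a rough illustration of why the number of MAC units matters, the sketch below counts the multiply-accumulate operations in one convolution layer and derives an idealized lower bound on latency. The layer shape, the 1,024 MAC units, and the 1 GHz clock are hypothetical values chosen for the example; real accelerators fall short of this bound because of memory stalls and imperfect utilization.

```python
def conv2d_macs(out_h, out_w, out_channels, in_channels, kernel_h, kernel_w):
    """MAC count for a standard dense 2D convolution (bias ignored)."""
    return out_h * out_w * out_channels * in_channels * kernel_h * kernel_w


def ideal_latency_ms(macs, mac_units, clock_hz):
    """Lower-bound latency if every MAC unit completes one MAC per cycle."""
    cycles = macs / mac_units
    return cycles / clock_hz * 1e3


# Assumed layer: 7x7 kernel, 3 input channels, 64 output channels,
# producing a 112x112 output feature map.
macs = conv2d_macs(112, 112, 64, 3, 7, 7)
print(f"MACs in layer: {macs:,}")

# Assumed accelerator: 1,024 MAC units clocked at 1 GHz.
print(f"ideal latency: {ideal_latency_ms(macs, 1024, 1e9):.4f} ms")
```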

2. Heterogeneous Computing Architectures

The most effective embedded system designs often combine different types of processing units. A typical edge AI system might integrate a CPU for control tasks, an NPU for ML inference, and a GPU for more intensive parallel processing or image pre-processing. Orchestrating these diverse units efficiently is a key design challenge.
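The orchestration problem can be sketched as a placement step that maps each task to a processing unit. The snippet below is a minimal illustration only: the task kinds, unit names, and the CPU fallback rule are assumptions made for the example, not the interface of any real runtime.

```python
# Hypothetical default mapping, mirroring the CPU / NPU / GPU split described above.
DEFAULT_PLACEMENT = {
    "control": "cpu",
    "inference": "npu",
    "preprocess": "gpu",
}


def place_tasks(tasks, available_units, placement=DEFAULT_PLACEMENT):
    """Assign each (name, kind) task to a unit, falling back to the CPU.

    The fallback covers unknown task kinds and units missing on this device.
    """
    plan = {}
    for name, kind in tasks:
        unit = placement.get(kind, "cpu")
        if unit not in available_units:
            unit = "cpu"
        plan[name] = unit
    return plan


pipeline = [
    ("read_sensor", "control"),
    ("resize_frame", "preprocess"),
    ("detect_objects", "inference"),
]

# A device with all three units, and a lower-cost variant without a GPU.
print(place_tasks(pipeline, {"cpu", "npu", "gpu"}))
print(place_tasks(pipeline, {"cpu", "npu"}))
```

A production scheduler would also weigh data-transfer costs between units and current load, which this static table ignores.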

3. Memory Optimization

ML models can be memory-intensive. Edge devices often have limited on-chip or external memory. Strategies include:

- High-Bandwidth Memory (HBM): For higher-performance edge devices, HBM can provide the necessary data throughput.

- On-chip Memory Hierarchies: Carefully managing caches and local memories is crucial to minimizing off-chip memory access, which is power-intensive and slower.

- Quantization: Reducing the precision of model weights and activations (e.g., from 32-bit floating point to 8-bit integers) significantly reduces memory footprint and computational requirements.
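The quantization step described above can be sketched in a few lines. This is a minimal example of symmetric per-tensor quantization to 8-bit integers, written in plain Python for clarity; production toolchains typically add per-channel scales, zero points, and calibration data, none of which are shown here. The sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.27]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("int8 values:", q)
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
# Each weight shrinks from 4 bytes (32-bit float) to 1 byte (int8).
print("memory reduction: 4x")
```

The rounding error per value is bounded by half the scale, which is why models with a narrow weight range tend to tolerate 8-bit quantization well.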

4. Power Efficiency

Edge devices are often battery-powered or operate within strict power budgets. Low-power design techniques are critical:

- Dynamic Voltage and Frequency Scaling (DVFS): Adjusting supply voltage and clock frequency based on workload.

- Power Gating: Shutting down unused parts of the chip.

- Specialized Low-Power Modes: Components designed for ultra-low power consumption during idle or low-activity periods.
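The benefit of DVFS follows from the standard first-order model of dynamic power in CMOS logic, P = a * C * V^2 * f, where a is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below applies that model to two operating points. The capacitance, voltages, and frequencies are hypothetical, and leakage power is ignored.

```python
def dynamic_power_w(capacitance_f, voltage_v, frequency_hz, activity=1.0):
    """First-order dynamic power: P = activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


def energy_per_task_j(capacitance_f, voltage_v, frequency_hz, cycles):
    """Energy for a fixed number of cycles: power multiplied by run time."""
    run_time_s = cycles / frequency_hz
    return dynamic_power_w(capacitance_f, voltage_v, frequency_hz) * run_time_s


C = 1e-9  # assumed switched capacitance, in farads
high = dynamic_power_w(C, 1.0, 1.0e9)   # assumed point: 1.0 V at 1 GHz
low = dynamic_power_w(C, 0.8, 0.6e9)    # assumed point: 0.8 V at 600 MHz
print(f"power at low point: {low / high:.1%} of high point")

cycles = 1_000_000
e_high = energy_per_task_j(C, 1.0, 1.0e9, cycles)
e_low = energy_per_task_j(C, 0.8, 0.6e9, cycles)
print(f"energy per task at low point: {e_low / e_high:.1%} of high point")
```

Lowering frequency alone makes a task run longer without saving energy per task in this model; the saving comes from the voltage reduction that the lower frequency permits.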

5. Robustness and Reliability

Embedded AI hardware must operate reliably in diverse and often harsh environments. This includes considerations for:

- Thermal Management: Efficient heat dissipation is essential for maintaining performance and device longevity.

- Electromagnetic Compatibility (EMC): Ensuring the device neither interferes with nor is disrupted by other electronic systems.

- Shock and Vibration Resistance: Especially important for industrial and automotive applications.

6. Security at the Edge

As devices become more intelligent and connected, they become attractive targets for attacks. Hardware-level security features are becoming indispensable:

- Secure Boot: Ensuring only trusted software can run on the device.

- Hardware Root of Trust: A secure foundation for cryptographic operations.

- Memory Protection Units (MPUs): Preventing unauthorized access to critical memory regions.

- Trusted Execution Environments (TEEs): Isolated environments for secure processing of sensitive data and ML models.
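To make the secure boot idea concrete, the sketch below shows only the integrity-check step: the firmware image is hashed and compared against a trusted reference digest before it is allowed to run. This is a simplified illustration. A real secure boot chain typically verifies an asymmetric signature with a key anchored in the hardware root of trust, and the firmware bytes here are placeholders.

```python
import hashlib
import hmac


def firmware_is_trusted(image: bytes, trusted_digest_hex: str) -> bool:
    """Return True if the image's SHA-256 digest matches the trusted digest.

    hmac.compare_digest performs a constant-time comparison, which avoids
    leaking how many leading characters matched.
    """
    actual = hashlib.sha256(image).hexdigest()
    return hmac.compare_digest(actual, trusted_digest_hex)


# Placeholder firmware image; on a device the reference digest would live
# in protected storage rather than being computed at boot.
firmware = b"example firmware image v1"
reference_digest = hashlib.sha256(firmware).hexdigest()

print(firmware_is_trusted(firmware, reference_digest))
print(firmware_is_trusted(firmware + b" tampered", reference_digest))
```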

Challenges and Future Directions

While the promise of Embedded AI is immense, several challenges remain. Integrating diverse hardware components and optimizing software stacks for various accelerators is highly complex. Additionally, managing the entire lifecycle of an AI model on an edge device, from training to deployment and updates, requires sophisticated embedded system design expertise.

Future trends will likely include:

- Even Greater Specialization: Further tailored AI accelerators for specific ML tasks (e.g., vision, natural language processing).

- Neuromorphic Computing: Hardware that mimics the human brain’s structure and function, offering ultra-low power and event-driven processing.

- On-Device Learning: Edge devices that train on new data locally, allowing them to adapt to different environments without frequent retraining in the cloud.

- Standardization: Efforts to create more unified software frameworks and hardware interfaces to simplify the development and deployment of Edge AI solutions.

The evolution of Embedded AI is a testament to human ingenuity. By effectively blending hardware innovation with intelligent software, we are pushing the boundaries of what is possible at the very edge of our digital world. The demand for skilled engineers capable of navigating the intricacies of designing embedded system solutions for this new paradigm will only continue to grow.

Empowering Edge AI Innovation with Tessolve

At Tessolve, we understand the critical importance of robust and efficient hardware for the success of Embedded AI. As a leading engineering solution provider, we empower our clients to bring their cutting-edge Edge AI visions to life. From initial concept to silicon realization and beyond, our comprehensive suite of services covers every aspect of embedded system design. We specialize in crafting optimized silicon for machine learning workloads, including verification and validation of complex heterogeneous architectures, ensuring your AI accelerators perform flawlessly. With our deep expertise in advanced testing methodologies and our commitment to an advanced design solution approach, Tessolve helps you navigate the complexities of power optimization, memory management, and security. This accelerates your time to market with reliable, high-performance Edge AI products. Let us be your trusted partner in shaping the future of intelligent devices.